If you’ve worked with AI-generated 3D, you know the frustration. A model looks perfect in a preview, then falls apart the moment you handle it. Rotate it, and surfaces warp. Import it into Unreal Engine, and the mesh is non-manifold. Try to 3D print it, and the software rejects it. This isn’t bad luck. It’s the predictable failure of a dominant approach that prioritizes visual illusion over geometric truth. Neural4D aims to solve this problem by generating 3D models natively in 3D space rather than reconstructing them from images.

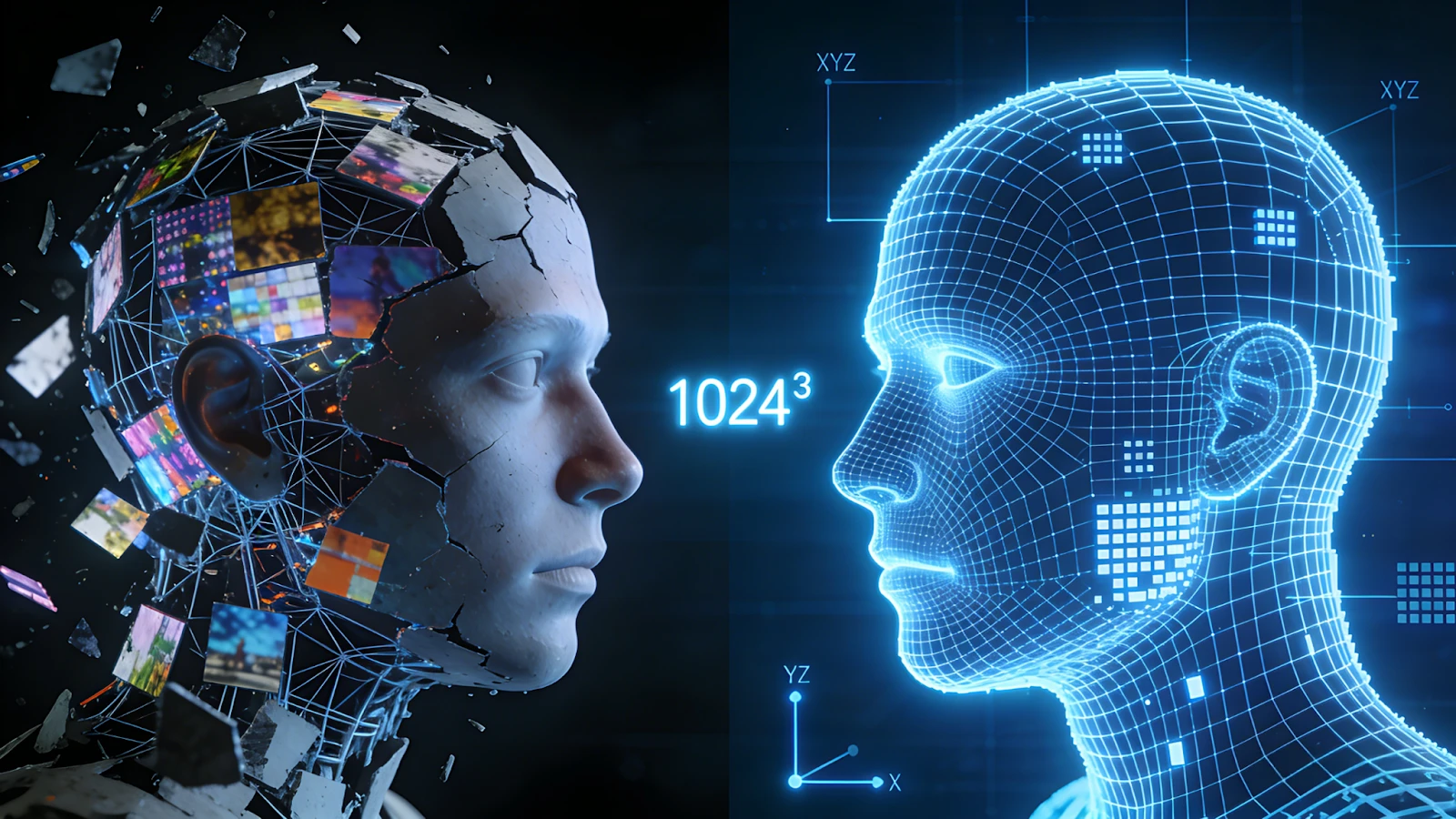

For years, the field has relied on a sophisticated workaround: use a 2D image model to generate multiple views, then painfully stitch them into a 3D shape. It’s reconstructing a sculpture from its shadows. The result is “geometric hallucination”—a form that pleases a static render but collapses under spatial scrutiny. The industry has hit a ceiling on this path. Game studios, product designers, and architects don’t need more beautiful demos; they need watertight, lightweight, production-ready assets. They need engineering, not just art. This growing chasm between AI output and industrial input is why a paradigm shift is inevitable. Neural4D’s approach represents that shift.

The Diffusion Ceiling: When 2D Logic Meets 3D Reality

The core issue with diffusion-based 3D generation is its origin. These systems are built on a statistical model of pixels, not a logical model of space. Their “understanding” is a correlation of image captions, not an internal blueprint. They can infer that a “table” has “four legs,” but they possess no inherent reasoning for how those legs connect to the tabletop, distribute weight, or form a solid, continuous surface.

When these models generate a shape, they perform high-dimensional interpolation between 2D concepts. There is no persistent, authoritative 3D coordinate system guiding the process. This leads to three fatal flaws for any practical use:

- Unusable Topology: The output mesh is often dense and irregular, riddled with holes and non-manifold edges that crash 3D software and fail 3D printing prep tools.

- The Scaling Trap: Achieving higher resolution isn’t a linear compute problem. In a 2D-diffusion pipeline, more detail means more generated viewpoints, and the effort needed to reconcile contradictions between those viewpoints grows far faster than the resolution itself.

- The Black Box: How do you edit what you’ve made? Want to change the pose of a generated character? You start over with a new text prompt, hoping the geometry doesn’t fracture. There’s no underlying structure to manipulate.

The industry has paid a tax in compute and manual labor to clean up these outputs. It’s a bottleneck disguised as a workflow.
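To make the "unusable topology" flaw concrete, here is a minimal sketch of the kind of check that import and print-prep tools apply before accepting a mesh. It assumes a triangle mesh given as a list of vertex-index triples; the function names `edge_report` and `is_watertight` are illustrative, not taken from any particular tool. It tests only edge sharing, so it will not catch flipped normals or self-intersections.

```python
from collections import Counter

def edge_report(faces):
    """Return (boundary_edges, non_manifold_edges) for a triangle mesh.

    An edge used by exactly one face lies on a hole or open border.
    An edge used by more than two faces is non-manifold.
    """
    counts = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store edges undirected so (1, 2) and (2, 1) count as the same edge.
            counts[(min(u, v), max(u, v))] += 1
    boundary = sorted(e for e, n in counts.items() if n == 1)
    non_manifold = sorted(e for e, n in counts.items() if n > 2)
    return boundary, non_manifold

def is_watertight(faces):
    """True when every edge is shared by exactly two faces."""
    boundary, non_manifold = edge_report(faces)
    return not boundary and not non_manifold

# A closed tetrahedron, the same shape with one face missing,
# and the same shape with an extra "fin" triangle glued onto edge (0, 1).
tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
open_tetra = tetra[:-1]
finned_tetra = tetra + [(0, 1, 4)]

if __name__ == "__main__":
    for name, mesh in [("closed", tetra), ("open", open_tetra), ("finned", finned_tetra)]:
        print(name, is_watertight(mesh), edge_report(mesh))
```

A mesh that fails this test is the kind of output that needs manual repair before it can be rigged or printed.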

Building Space, Not Pictures: The Neural4D Approach

The alternative path is radical in its simplicity: instead of reconstructing 3D from 2D fragments, build the 3D representation natively and intelligently from the ground up.

This is the architecture behind Neural4D’s Direct3D-S2. Its core is a Spatial Sparse Attention (SSA) mechanism. Forget generating a dense 3D block all at once. SSA works like a precision engineer. It treats 3D space as a sparse, hierarchical field, placing its computational focus only on regions where matter exists. It starts with a coarse shape and recursively subdivides, adding detail only where it’s needed. This isn’t image synthesis; it’s structured spatial reasoning.
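The coarse-to-fine idea can be illustrated with a toy octree-style refinement. This is a sketch of the general principle only, not the Direct3D-S2 or SSA implementation: the function names are hypothetical, and a sphere surface stands in for "regions where matter exists". Each pass splits every active cell into eight children and keeps only the children that the surface passes through.

```python
from itertools import product

def touches_sphere(cell, level, center=(0.5, 0.5, 0.5), radius=0.4):
    """True if the sphere surface passes through the given cell.

    `cell` is an integer (i, j, k) index on a grid with 2**level cells
    per axis spanning the unit cube.
    """
    size = 1.0 / (1 << level)
    d_min_sq = 0.0  # squared distance from center to the nearest point of the cell
    d_max_sq = 0.0  # squared distance from center to the farthest corner of the cell
    for idx, c in zip(cell, center):
        lo, hi = idx * size, (idx + 1) * size
        nearest = min(max(c, lo), hi)
        farthest = lo if abs(c - lo) > abs(c - hi) else hi
        d_min_sq += (c - nearest) ** 2
        d_max_sq += (c - farthest) ** 2
    return d_min_sq <= radius ** 2 <= d_max_sq

def refine(levels, occupied=touches_sphere):
    """Subdivide recursively, keeping only cells where `occupied` is true.

    Returns the active cells at the finest level and the number of
    active cells recorded at every level.
    """
    active = [(0, 0, 0)]
    history = [len(active)]
    for level in range(1, levels + 1):
        children = []
        for i, j, k in active:
            for di, dj, dk in product((0, 1), repeat=3):
                child = (2 * i + di, 2 * j + dj, 2 * k + dk)
                if occupied(child, level):
                    children.append(child)
        active = children
        history.append(len(active))
    return active, history

if __name__ == "__main__":
    cells, history = refine(levels=5)
    for level, n in enumerate(history):
        dense = 8 ** level
        print(f"level {level}: {n} active of {dense} dense cells")
```

Because a child cell can only touch the surface if its parent does, discarding empty parents never loses detail. The active cell count grows roughly with surface area rather than volume, which is the reason a sparse representation can reach resolutions where a dense grid is impractical: at 1024 cells per axis, a dense grid holds 2^30, or about 1.07 billion, cells.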

This methodology aligns with how graphics pipelines actually work. Game engines and CAD software consume clean, efficient geometry. By building a native sparse 3D representation, the Neural4D approach inherently promotes coherent structure.

The technical outcomes validate the premise:

- Resolution with Purpose: This spatial-first logic is what enables Neural4D’s leap to 1024³ native resolution. Fine detail, such as gear teeth or fabric weave, is added only where it is needed, keeping the overall model efficient and game-ready.

- Inherently Clean Geometry: The process naturally tends toward watertight surfaces and cleaner topology. By reasoning about occupied space logically, it avoids the impossible gaps and internal flaws that plague diffusion methods.

- The Pathway to Control: A model built within a logical spatial framework has an inherent scaffold. This explains how a system like Neural4D can offer conversational editing (as seen in Neural4D-2o)—you’re not begging a prompt for a new variation; you’re instructing the system to adjust parameters within a stable geometric framework.

The New Benchmark: From Demo Asset to Drop-In Tool

The implications are immediate and practical. The benchmark for 3D AI is no longer a pretty turntable video. It’s “Can this model be textured, rigged, and dropped into a scene before my coffee gets cold?”

When geometry is generated with native spatial logic, it arrives pre-sanitized for industry workflows. It dramatically reduces the “AI tax” from days of manual cleanup to minutes of final polish. This transforms the AI from a concept generator into a reliable production asset.

The conversation has moved past visual fidelity. The next frontier is spatial intelligence—the capacity to generate not just form, but function; not just appearance, but architecture. This is the intelligence Neural4D is coding into its spatial engine. The goal isn’t to generate novel shapes for a portfolio. It’s to forge the reliable, geometric foundation for the virtual worlds being built right now.